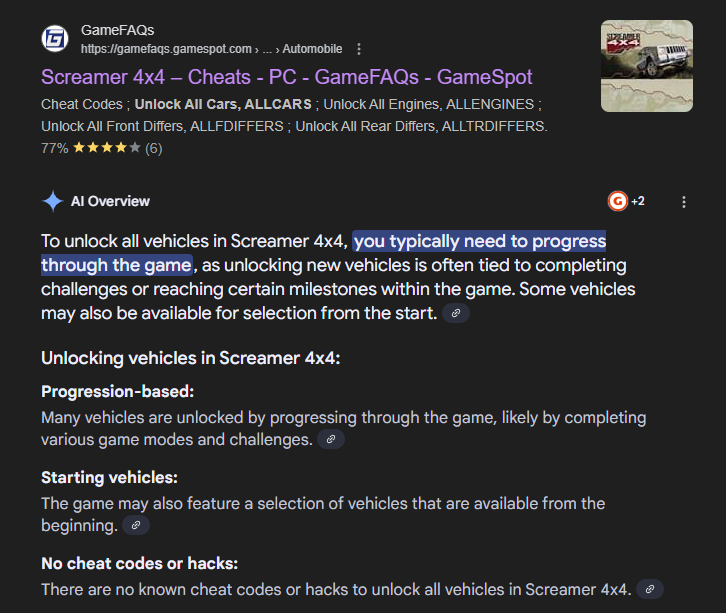

Shown: An AI saying "there are no known cheat codes" next to the link with all the (working) cheat codes.

@sj_zero The sad reality is that the AI dev teams - being the highest paid ones ever in software building history - do not understand how and why "their" LLMs select this output instead of that other one.

They'll invoke "gradient descent" the same way any State refers to "democracy": as a void-covering concept.

But Google is infamous for its research role in getting the current "AI" boom going, and then losing its talent to companies that were actually capable of bringing stuff to market. It's perhaps more competent than Apple, a hardware company that's not good at software, but maybe that's just Apple not being willing to inflict the above sort of lunacy on its customers???

Going "Gemini isn't a threat to me" ends up essentially being "Google wielding Gemini isn't a threat to me" in the public's eye.

Contrast with ChatGPT, which expresses a lot more basic competence and has people a lot more worried about what OpenAI will do with its models.

I'd probably agree with you that, separate from public perception, AI as a whole could become something dangerous because of the blind self-interest of companies. It's already bad enough having human beings with a conscience making decisions -- if you have even a low-intelligence AI making mass decisions with the sole intent of making the company more powerful, and it doesn't really care that much about the morality or ethics or humanity of the decisions, you can have a lot of evil created that actually does make the people who caused it more powerful.

@sj_zero "the psy-op of Google potentially neutering its AI for PR purposes"

I suspect the real reasons are entirely internal. Perhaps some competence issues, but definitely the company being so wedded to social justice, so full of SJWs, the people working on the "AI" must make it "safe" or they'll lose their jobs.

Again I refer to this output, the bad publicity of which I recall was said to have caused it to be withdrawn for fixing.

On the other hand, for your purposes, for your thesis here, does the reason matter??

There's no reason for Gemini to lie about cheat codes for a 20-year-old video game relating to wokeness.

I don't think what you're saying is without merit though. Maybe the reason is something closer to that.

@sj_zero "There's no reason for Gemini to lie about cheat codes for a 20-year-old video game relating to wokeness."

There is, actually, the theory of social justice convergence: that the more woke an organization gets, the less competent it becomes at its Official purposes.

Adhering to social justice comes with all sorts of penalties, friction, etc. The best people as we see it don't get promoted or have the power to direct things, SJWs constantly add friction to everything and occasionally whack people through holiness spiraling, orgs get a reputation and lose some of their potential talent pool, etc.

As it was, Google was already suffering from various big company diseases, plus their monorepo's constant rotting of product foundations, a major reason for the Google Graveyard, along with maintenance of all but the most important products not getting respected or rewarded. And there's the "you only get promotions for new products" one; these products at some point stop being new???

A modern example of the principal–agent problem: https://en.wikipedia.org/wiki/Principal%E2%80%93agent_problem

Linguistic mimicry is today's bare minimum; an amateur can achieve that on consumer-grade hardware. There's still no real memory mechanism, short- or long-term, for AI models. Logic is inferred linguistically without abstraction too, which is still pathetic for "intelligence".